Programming Language Experiments

This page describes one particular strand of thinking and experimentation I’ve done over the past few years, exploring ideas around programming-language and virtual-machine design and implementation.

I’m particularly interested in the potential of partial evaluation in reflective systems; at the end of the page is an outline of some of the topics I want to work on in future.

“Perhaps the whole distinction between program and programming language is a misleading idea, and future programmers will see themselves not as writing programs in particular, but as creating new languages for each new application.”

– Hal Abelson, in the foreword to Essentials of Programming Languages

Background

Ever since a flash of midnight inspiration in 2004 that had been building up over the course of the preceding couple of years, I’ve been actively exploring language design ideas around reflection, metaprogramming, pattern-matching, object-orientation, and concurrency when I can. (If that sounds like an intractably large piece of design space, that’s because it is.)

I originally wanted something close to Smalltalk-72 and the π-, blue-, ambient- and join-calculi, for their accounts of message-passing and of concurrency, communication, and location. Recently, however, I’ve moved away from the pure concurrent calculi and toward a more traditional eager functional language, but placed in as well-developed a reflective environment as I can construct. I believe reflection to be absolutely key, not only to personal computing, but to the construction of maintainable long-term software projects in general.

I’ve a whole blog category devoted to it here, though it’s languished a bit recently.

The code itself is scattered throughout this Git repository, which you can clone and browse in the usual way.

git clone https://gitlab.com/tonyg/smalltalk-tng

Because of the sporadic nature of the work, there’s no coherent overview of it all yet, so I’ll do my best to get across the intent behind some of the pieces that make up the whole, organised roughly by the layout of the code repository.

Notes on design and implementation

…/doc contains notes on design and implementation, mostly fairly out-of-date, but containing the odd interesting thought. There are also some discarded experiments in concrete syntax here.

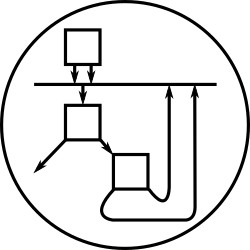

Partial Evaluation

I’m enthusiastic about the potential of using partial evaluation techniques in settings where they haven’t been widely applied yet: with JIT-style type feedback to provide a poor-man’s dynamically optimising compiler; with reflective metamodels to provide a clean yet efficient approach to many-language, single-runtime environments; with network stacks to regain efficiency without losing clarity and flexibility; with libraries structured around stream fusion for efficient data structure transformation; with IDEs and interactive proof assistants to help programmers reason about their code.

So far, I’ve implemented a partial evaluator for a core-scheme-like language following “Partial Evaluator as a Compiler for Reflective Languages”, Asai, Masuhara, Matsuoka and Yonezawa (1995), but not (yet) including preactions. The code is in …/experiments/partial-eval/pe.rkt, with examples near the bottom of the file. See this blog post for a little more about it.

The next step down this line of research is to construct a similar system for an object-oriented programming language, rather than a Scheme-like language; the current plan is to take …/etng-r2 in this direction.

Traits, Composable Functions, and Pattern Matching

…/experiments/traits contains a couple of utterly minimalistic Traits implementations in Scheme. I wrote up what I did, and some of the consequences it led to.

Implementing traits this way led me to an interesting observation: pattern-matching and object-oriented method dispatch (and hash-table lookup, for that matter) are exactly the same thing. Others have made the same observation before, but I’m not sure anyone is taking it in the particular direction that I’m trying for.

Given this observation, if one were to take an ML-like language with decent pattern matching, permit its functions to be composed in a delegation relationship (if one doesn’t match, try the next: this is the traits “override” operator), and provide for open recursion (essentially, the “self” of many object-oriented languages), one would arrive at an object-functional language based on traits. This is the line I’m exploring with …/etng-r2 (with one additional wrinkle: letting the pattern-matching be an OMeta-like general data structure parser; see also unipat.scm).

Generating executable IA-32 machine code directly into memory

A while back, I ordered paper copies of the Intel IA-32 and -64 reference manuals1, and when they arrived, was inspired to try my hand at generating machine code directly into memory. I used an embedded tinyscheme, extended with primitives for creating new primitive-function objects from passed-in bytevectors, as a platform to work from.

The code is in …/experiments/codegen. The source

to the relocating assembler is in

codegen.scm.

There’s a sketch of a direct-to-memory Scheme

compiler near the bottom of the file. I took the information on the

instruction encodings direct from the Intel reference manuals, used

dlsym to link generated code against libc objects, and used

libdisasm-0.23 to

sanity-check my instruction encodings. Writing an assembler wasn’t as

hard as I’d thought it might be, even given the awkwardness of x86

machine code! Making the assembler a DSL within Scheme was also a

win—having ‘instruction combinators’ gives rise to a powerful

macro-assembler without any extra effort on my part.

Equivalences and Hashconsing

Ever since the work I did on Highwire, I’ve been thinking about equivalences. It took me a long time to realise just how fundamental the notion of equivalence is to computing theory and practice. All sorts of beautiful results come from considerations of different behavioural equivalences in the π-calculus world, for example, but more prosaically, careful selection of the equivalences a language specification provides for applications programmers using that language can greatly influence both the ‘feel’ and the efficiency of the language.

Henry Baker’s

egal is an

interesting kind of equivalence. It seems like a good default choice

for a language (is Haskell’s deriving eq egal-like?). Given that

when implementing an object-oriented language like Self or Smalltalk

one is constantly comparing class/map values for equivalence in the

operation of the method caches, and one does not wish to cheat here

and use something awful like direct pointer

comparison2, one approach to building an

efficient egal is to use hash consing in the allocator, which has

the happy outcome of causing efficient direct pointer comparison to

line up with the equivalence we want.

In order to gain experience with hash consing, I built a simple program for comparing the performance of a hash consing allocator with an allocator that did not maintain a sharing table.

The results3

./hashcons unshared --> 81985400 Hz

./hashcons shared --> 41180700 Hz

./hashcons uniform --> 484990000 Hz

confirm the expected outcome:

-

checking for sharing (hash consing) slows down allocation when there is no sharing, in proportion to the size of the object being allocated

-

checking for sharing can significantly speed up allocation when sharing exists!

so it’s by no means a foregone conclusion that the default allocator for a language runtime has to be a non-hash-consing one.

The language prototypes themselves

Essentially, each prototype implementation is a large experiment resulting from the outcomes of a number of smaller experiments. All the prototypes here rely on one or other of my parsing libraries.

…/r1

A first ThiNG spike implementation, borrowing ideas heavily from

Slate. The dispatch algorithm is a Scheme reimplementation of Slate’s

original common-lisp dispatch algorithm (the code is structurally

almost identical). The main difference between the two languages is

that the only available mutable state is through MVars, and that every

subexpression is evaluated in parallel. Have a look at

…/r1/boot.thing to get a feel for the

language. (Note in particular the differences between #map: and

#mapInOrder:.)

This implementation got as far as having image-based persistence, network I/O, a socket-based REPL, and an interface to the SDL graphics output and UI event input frameworks before I decided I’d learnt enough from it and moved on to the next iteration.

r2 and …/r3

r2 was a spike on the way to r3. These two prototypes were the first and last of the fully-lazy variants that I explored. Problems with structuring of effects, and inspiration from Javascript (of all things) and Lua, led me back to eager evaluation for subsequent work. I’d like to return to these variants someday, as the patterns-all-the-way-down idea is intriguingly similar to Jay & Kesner’s Pure Pattern Calculus, and deserves further exploration.

…/etng-r1

The first eager language variant I worked on. Notable points include

use of the period, ., as a quote operator (like Scheme’s quote,

'). This, with curried application, leads to interesting syntax for

method dispatch:

let sock = serverSocket.accept(); ...

which ends up sending .accept, the literal symbol accept, to

serverSocket, and then sending the empty tuple, (), to the result,

finally binding the ultimate result to the variable sock before

continuing. Using . as a quote operator leads to familiar-looking

message-sending syntax for many programmers.

…/etng-r2

After a few flashes of inspiration around traits, pattern-matching, and parsing (mentioned above), I started work on etng-r2 based on etng-r1. This is the first variation in a while to actually have a working evaluator (see …/etng-r2/compile-to-scheme.scm). It’s based heavily on OMeta-style techniques, which are used not just to read and parse the language, but also to perform some of the rewriting steps during compilation. OMeta is a really interesting swiss-army knife in a compiler construction toolkit!

One syntactic tidbit: I tried out a ‘pipe’ operator, which transforms

a | f b into f a b left-associatively, permitting ‘pipeline’

construction:

(1, 2, 3, 4) | s:foldr s:empty s:cons | s:do write;

(1, 2) | s:append (3, 4) | s:do write;

(| s:append (3, 4) | s:do write) (1, 2);

This is the language variant I’m currently still working on.

Other Experiments

…/experiments contains many small and medium-sized experiments used to gain experience to decide how to proceed in a wider context. Among others:

-

A-Normal Form, …/experiments/anf: A compiler converting a core-scheme-like language to ANF, after Flanagan et.al. 1993, “The Essence of Compiling with Continuations”.

-

Experiments in ELF object-file construction, …/experiments/assembly: After discovering Richard Jones’s FORTH system and learning enough about FORTH to port it to PowerPC assembly, I played around with generating executable ELF files from scratch. After all, a self-hosting system will need to ground itself out somewhere, someday!

-

Precedence-parsing, …/experiments/precedence-parsing.scm: I’ve built many different parsing libraries over the years, but somehow never a precedence parser. This one is to get a feel for the technique. I’d like to combine it with an OMeta parser, as having a precedence parser around can be good for letting users add new operators to a language in a safe way.

Next Steps

I’m still itching to try hitting more problems with my partial-evaluation-shaped hammer, as mentioned above. Lots of modern software tricks would, I think, be drastically simplified if expressed in terms of interpreter-style analyses composed via partial-evaluation with the data they’re analysing.

When tracemonkey was announced, I discovered trace-trees/tracing-JIT, which I’d also like to experiment with (perhaps in conjunction with a partial evaluator?).

My work on RabbitMQ and AMQP has caused some interesting thoughts about how eventing is a missing piece of object-oriented programming: one of the first things implemented in almost every object-oriented library is some variation on the observer-observable pattern. How can eventing be integrated more naturally into a programming language setting? What about a networking setting? What about integrating networking itself, being all about message-passing in a distributed system, more closely into programming languages? Functional reactive programming and Coherence are two good points to explore from.

While I haven’t written much on reflection or on virtual-machines, the ideas of metalevel boundaries and process isolation loom large in my thinking. Reading the story here made me think again how isolation of virtual machines, or capability-based security in the context of metaprogramming, could help real users avoid serious problems when carrying out everyday tasks. Craig Latta’s Spoon project and, to a lesser extent, the Hydra Squeak VM are really interesting lines of work in this direction.

All of this is, of course, also deeply connected with my ideas for where I want my OS experiments to go.

-

All you have to do is ask—they mail them straight out to you. It doesn’t even cost any money. ↩

-

assuming that pointer comparison does not perfectly line up with the equivalence one needs ↩

-

Measurement taken on a 2GHz Core 2 Duo Macbook running OS X 10.5.8, using i686-apple-darwin9-gcc-4.0.1 (GCC) 4.0.1 (Apple Inc. build 5465) at

-O3. ↩