Listening to your Webcam

This page is a mirrored copy of an article originally posted on the (now sadly defunct) LShift blog; see the archive index here.

Fri, 25 July 2008

Here’s a fun thing:

The Analysis & Resynthesis Sound Spectrograph, or ARSS, is a program that analyses a sound file into a spectrogram and is able to synthesise this spectrogram, or any other user-created image, back into a sound.

Upon discovery of this juicy little tool the other day, Andy and I fell to discussing potential applications. We have a few USB cameras around the office for use with camstream, our little RabbitMQ demo, so we started playing with using the feed of frames from the camera as input to ARSS.

The idea is that a frame captured from the camera can be used as a spectrogram of a few seconds’ worth of audio. While the system is playing through one frame, the next can be captured and processed, ready for playback. This could make an interesting kind of hybrid between dance performance and musical instrument, for example.

We didn’t want to spend a long time programming, so we whipped up a few shell scripts that convert a linux-based, USB-camera-enabled machine into a kind of visual synthesis tool.

Below is a frame I just captured, together with the processed form in which it is sent to ARSS for conversion to audio. Here’s the MP3 of what the frame sounds like.

Each frame is run through ImageMagick’s “charcoal” tool, which does a good job of finding edges in the picture; the result is then inverted and passed through a minimum-brightness threshold. The resulting line-art-like frame is run through ARSS to produce a WAV file, which can then be played or converted to MP3.

Ingredients

You will need:

- one Debian, Ubuntu or other linux computer, with a fairly fast CPU (anything newer than ca. 2006 ought to do nicely).

- a USB webcam that you know works with linux.

- a copy of ARSS, compiled and running. Download it here.

- the program “webcam”, available in Debian and Ubuntu with apt-get install webcam, or otherwise as part of xawtv.

- “sox”, via apt-get install sox or the sox homepage.

- “convert”, via apt-get install imagemagick or from ImageMagick.
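Before going further, it may be worth checking that everything in the list above can actually be found. The following is only a minimal sketch and not part of the original scripts: the helper name missing_tools is made up for illustration, and it assumes that play is installed alongside sox (as it usually is) and that ARSS sits in the current directory, since the scripts below run it as ./arss.

```shell
#!/bin/sh
# Hypothetical helper (not from the original article): print the name of
# every command given as an argument that cannot be found on the PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Tools the article relies on; "play" is assumed to come with sox.
missing=$(missing_tools webcam sox play convert)
if [ -n "$missing" ]; then
    echo "Not found on PATH:" $missing
fi

# The scripts call ARSS as ./arss, so look for it in the current directory.
[ -x ./arss ] || echo "No executable ./arss in $(pwd)"
```

If it prints nothing, all of the ingredients are in place.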

Method

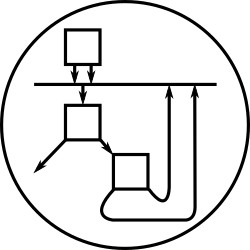

The scripts are crude, but somewhat effective. Three processes run simultaneously, in a loop:

- webcam runs in the background, capturing images as fast as it can, and (over-)writing them to a single file, webcam.jpg.

- a shell script called grabframe runs in a loop, converting webcam.jpg through the pipeline illustrated above to a final wav file.

- a final shell script repeatedly converts the wav file to raw PCM data, and sends it to the sound card.

Here’s the contents of my ~/.webcamrc:

    [grab]
    delay = 0
    text = ""

    [ftp]
    local = 1
    tmp = uploading.jpg
    file = webcam.jpg
    dir = .
    debug = 1

Here’s the grabframe script:

    #!/bin/sh
    # Image processing parameters (ImageMagick)
    THRESHOLD_VALUE=32768
    THRESHOLD="-black-threshold $THRESHOLD_VALUE"
    CHARCOAL_WIDTH=1

    # Synthesis parameters (ARSS)
    LOG_BASE=2
    MIN_FREQ=20
    MAX_FREQ=22000
    PIXELS_PER_SECOND=60

    # Wait for webcam to write a frame
    while [ ! -e webcam.jpg ]; do sleep 0.2; done

    # Edge-detect, invert and threshold the frame
    convert -charcoal $CHARCOAL_WIDTH -negate $THRESHOLD webcam.jpg frame.bmp
    mv webcam.jpg frame.jpg

    # Synthesise to a temporary file, then rename it into place
    ./arss frame.bmp frame.wav.tmp --log-base $LOG_BASE --sine --min-freq $MIN_FREQ --max-freq $MAX_FREQ --pps $PIXELS_PER_SECOND -f 16 --sample-rate 44100
    mv frame.wav.tmp frame.wav

You can tweak the parameters and save the script while the whole thing is running, to experiment with different options during playback.
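When tweaking, it can help to know roughly what PIXELS_PER_SECOND, MIN_FREQ and MAX_FREQ will do before listening. The sketch below rests on two assumptions about ARSS: that it plays one pixel column every 1/pps seconds, and that it spreads the image rows logarithmically between the minimum and maximum frequency. The 320-pixel frame width is only an example value, and the helper names are invented here.

```shell
#!/bin/sh
# Hypothetical helpers, not part of grabframe.

# Seconds of audio produced by one frame: width in pixels / pixels per second.
frame_seconds() {
    awk -v w="$1" -v pps="$2" 'BEGIN { printf "%.2f\n", w / pps }'
}

# Pixels per second needed for a frame of the given width to last the given time.
pps_for_duration() {
    awk -v w="$1" -v s="$2" 'BEGIN { printf "%.0f\n", w / s }'
}

# Size of the frequency range in units of the log base (octaves for base 2).
frequency_span() {
    awk -v lo="$1" -v hi="$2" -v base="$3" \
        'BEGIN { printf "%.2f\n", log(hi / lo) / log(base) }'
}

frame_seconds 320 60        # example 320-pixel-wide frame at the script's 60 pps
pps_for_duration 320 2      # pps that would make the same frame last two seconds
frequency_span 20 22000 2   # the script's 20 Hz to 22000 Hz range, in octaves
```

Under those assumptions, a 320-pixel-wide frame at 60 pixels per second lasts a little over five seconds, raising the setting to 160 would bring it down to two seconds, and the default frequency range covers roughly ten octaves from the bottom of the image to the top.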

To start things running:

- In shell number one, run “webcam”.

- In shell number two, run “while true; do ./grabframe ; done”.

- In shell number three, run “(while true; do sox -r 44100 -c 2 -2 -s frame.wav frame.raw; cat frame.raw; done) | play -r 44100 -c 2 -2 -s -t raw -”.

That last command repeatedly takes the contents of frame.wav, as output by grabframe, converts it to raw PCM, and pipes it into a long-running play process, which sends the PCM it receives on its standard input out through the sound card.
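Because the playback loop can read frame.wav at any moment, it matters that grabframe writes to frame.wav.tmp first and only then renames it. A rename within a single filesystem is atomic, so the reader sees either the previous frame or the new one, never a half-written file; presumably that is why the script is written this way, although it doesn't say so. Here is the same pattern in isolation, as a sketch with an invented helper name (publish) and a scratch file standing in for real audio:

```shell
#!/bin/sh
# Hypothetical helper illustrating the write-then-rename pattern used for
# frame.wav; the name "publish" is invented for this sketch.
# Usage: some_command | publish target_file
publish() {
    target=$1
    tmp="$target.tmp"
    # Write the complete new contents next to the target...
    cat > "$tmp" || return 1
    # ...then swap it into place in a single step.
    mv "$tmp" "$target"
}

# Demonstration with a scratch directory instead of real audio.
demo_dir=$(mktemp -d)
echo "first frame"  | publish "$demo_dir/frame.wav"
echo "second frame" | publish "$demo_dir/frame.wav"
cat "$demo_dir/frame.wav"    # prints "second frame"
rm -r "$demo_dir"
```

The temporary file has to live on the same filesystem as the target for the rename to be atomic, which is why it is created alongside it rather than in /tmp.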

If you like, you can use esdcat instead of the play command in the pipeline run in shell number three. If you do, you can use extace to draw a spectrogram of the sound that is being played, so you can monitor what’s happening, and close the loop, arriving back at a spectrogram that should look something like the original captured images.

Comments

On 26 July, 2008 at 5:54 am, wrote:

On 26 July, 2008 at 7:32 pm, wrote:

Brilliant! I’m glad to see an example of someone scripting something good with the ARSS in the middle :-)

I never even thought of such an application, and it made an idea occur to me. Using this, you could very well position the webcam as to film a surface, and on that surface you’d position items, which once run through your script would translate as music, the simplest example being a drum beat. This way you could “edit” the beat by moving items around on the surface (or even drawing, could very well be a blackboard) and hear the loop smoothly looping.

Could make a pretty cool YouTube video ;)

On 28 July, 2008 at 12:30 am, wrote:

This is the kind of thing that Aphex Twin (Richard James) uses on some of his tracks. Your sample track sounds quite similar to some music of his off of the album Drukqs.

On 28 July, 2008 at 5:08 am, wrote:

Brian H : I think it has to do with the fact that Aphex Twin is a talentless hack who just feeds random images (textures and such) to a spectrogram synthesiser and has the nerve to label it music. Yet everybody thinks it’s so great because of the relative novelty of the whole thing.

The annoying part is that I’m doomed to hear people mentioning this glorified unskilled git every time someone talks about spectrogram synthesis ;-). The fact that a sound produced automatically from a webcam image of an office suffices at reproducing his sort of “music” is a sufficient proof that he’s far from being the musical genius he’s made out to be. The only sort of artist he is is a con artist. (I’m the creator of this ARSS program and I approve this rant).

On 28 July, 2008 at 6:29 am, wrote:

Michel: Thanks for the great software. The blackboard idea is good; much easier to arrange than what we were thinking. Hmm, we have several appropriately-sized whiteboards around the office…

We were thinking along the lines of having a white backdrop with people in black bodysuits, and props that could be held up in front of the camera with high-contrast features drawn on them. Or maybe white backdrop, white bodysuits (so the people themselves are “invisible”), and solely use the props.

The props would have circles, hexagons, V-shapes etc. painted on them. One interesting one would be a series of parallel lines at the correct spacing to be harmonic intervals for the given resolution; then holding that up and moving it up and down would change the pitch of the tone, left and right would change the onset, and closer and further away would distort the harmonics through the perspective transform of the camera. It might be easier to get a good sound out of it if you added a delay (= visual echo), but that is kind of cheating…

Just some random thoughts, really. I might give your blackboard idea a go.

On 29 July, 2008 at 12:11 pm, wrote:

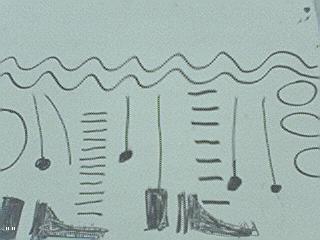

The blackboard (actually, whiteboard) idea worked out well! We pointed our camera at a nearby board, shortened the frame play time to about two seconds, and hooked up the output (via esd) to our jukebox speakers. A crowd quickly grew around the board, and we had a collaborative composition session; here’s a throwaway example of the kind of thing we came up with:

We’re complete novices on this musical instrument; it’ll be interesting to see how we learn.

On 29 July, 2008 at 4:22 pm, wrote:

Hehe, pretty good! Congratulations on inventing and pioneering collaborative spectrogram-based music creation! ;-)

I don’t know if you’re still using the charcoal effect on this one, but if you are, you could just use the -normalize option instead, on top of the inversion (-invert?)

By the way, you did that at work? What kind of job can be cool enough as to let you gather around a few employees to make a techno beat on the whiteboard? ;-)

On 29 July, 2008 at 5:22 pm, wrote:

By the way, you did that at work? What kind of job can be cool enough as to let you gather around a few employees to make a techno beat on the whiteboard? ;-)

On 29 July, 2008 at 5:47 pm, wrote:

Haha, great. However I live in Dublin (Ireland, not Ohio) and I know nothing of Java, .Net or databases. I’m more the embedded type (my CV) although right now I’m looking for sysadmin jobs.

On 1 August, 2008 at 10:23 am, wrote:

Thanks for the -normalize option suggestion - it made a big difference. We’ve also started using the “-distort Perspective” option to select out a region from the webcam frame for rendering, which gives us much more flexibility in how we position the camera.

On 14 August, 2008 at 3:59 pm, wrote:

Hi Tony. Just a quick comment to let you know this is running on a new server now. And, err, test that comments work.

On 22 November, 2009 at 7:44 am, wrote:

I randomly stumbled across another not so awesome but also slightly interesting way: If you try to import a video into Audacity, it comes out as strange squeaky stuff.

Still, I like this way more :)

On 1 February, 2010 at 3:58 pm, wrote:

[...] provides a great tutorial on how to convert live webcam images into audio, which I’ve used as a starting point for my [...]

On 9 February, 2010 at 9:50 pm, wrote:

[...] environment. As an example, here’s a video of me by Nikki doing a live demonstration of tonyg’s method of combining ARSS and SOX to convert images into [...]

The sound was a lot better than I expected. Thought it would just be close to noise, but sounded a wee bit Dr Who.